Data Crawling

Our data provision service offers an automated solution for systematically navigating through websites. We employ web crawlers – often referred to as spiders or bots – to gather relevant data such as text, images, links, and both structured and unstructured information.

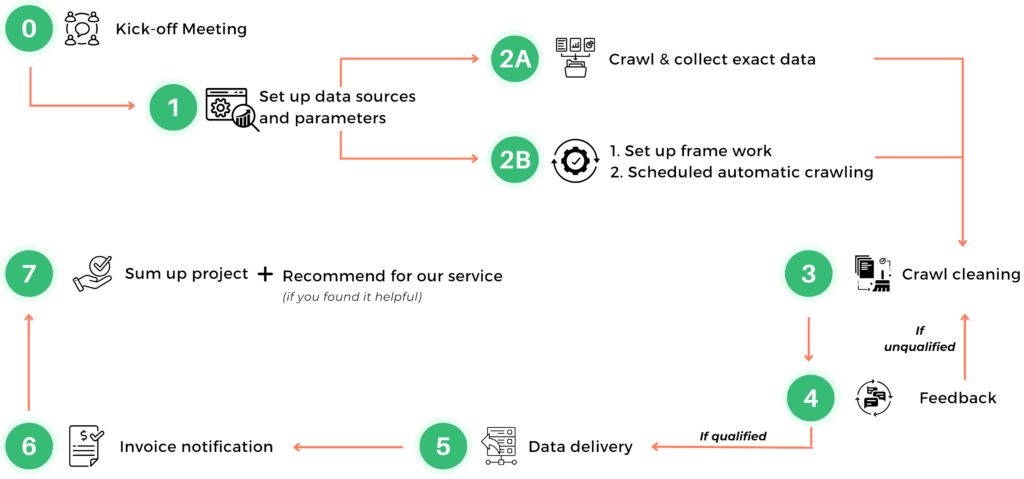

Working Process

Our Case Studies

E-commerce product matching project

A toner e-commerce company needed a solution to collect URLs and details of competitor products matching specific criteria for competitive analysis and strategic decision-making.

Language/Tool: Node.js, MySQL, cheerio, axios

Solution:

- Developed a tailored web crawling tool that collects URLs and product information from competitor websites based on predefined criteria.

- The tool extracts the relevant data, applies anti-blocking measures, and stores the results in a structured database for analysis.
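The criteria-based collection step can be illustrated with a minimal sketch. The project itself was built with Node.js, cheerio, and axios; the version below uses only Python's standard library for illustration. The names `ProductLinkParser` and `match_products`, the sample HTML, and the `competitor.example` domain are all hypothetical, and matching on keywords in the link text is an assumed stand-in for the real predefined criteria.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ProductLinkParser(HTMLParser):
    """Collects (absolute URL, link text) pairs from anchor tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self._href = None   # URL of the anchor currently being read
        self._text = []     # text fragments seen inside that anchor

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def match_products(html, base_url, keywords):
    """Return links whose text contains every keyword (case-insensitive)."""
    parser = ProductLinkParser(base_url)
    parser.feed(html)
    wanted = [k.lower() for k in keywords]
    return [(url, text) for url, text in parser.links
            if all(k in text.lower() for k in wanted)]


# Hypothetical listing page, inlined so the sketch runs without network access.
SAMPLE_HTML = """
<ul>
  <li><a href="/p/101">Black Toner Cartridge TN-100</a></li>
  <li><a href="/p/102">Cyan Ink Bottle</a></li>
  <li><a href="/p/103">Black toner refill kit</a></li>
</ul>
"""

matches = match_products(SAMPLE_HTML, "https://competitor.example", ["black", "toner"])
```

In a real crawler the HTML would come from an HTTP client, and the matched rows would be written to the database rather than kept in memory.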

Hotel booking and travel data update project

The client needed to extract comprehensive information from Rakuten Travel, such as hotel names, up-to-date prices, tourism boards, and user reviews.

Language/Tool: Python, pandas, MySQL, Selenium, Beautiful Soup, SQLAlchemy

Solution:

- Track price fluctuations hourly, helping the booking platform offer the most competitive prices.

- Use collected data to train AI and recommend relevant hotels in a timely manner to customers.

- Provide market insights and seasonal event information to give a clearer perspective when setting business goals.
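As a rough illustration of hourly price tracking, the sketch below compares successive snapshots and reports each change. It assumes snapshots arrive as `(hotel, timestamp, price)` tuples; the function name `price_changes` and the sample values are hypothetical and not taken from the project.

```python
from datetime import datetime


def price_changes(snapshots):
    """Return (hotel, time, old_price, new_price) for every snapshot whose
    price differs from that hotel's previous snapshot."""
    last_seen = {}
    changes = []
    # Process snapshots in chronological order.
    for hotel, taken_at, price in sorted(snapshots, key=lambda s: s[1]):
        previous = last_seen.get(hotel)
        if previous is not None and previous != price:
            changes.append((hotel, taken_at, previous, price))
        last_seen[hotel] = price
    return changes


# Made-up hourly snapshots for two hotels.
snapshots = [
    ("Hotel A", datetime(2024, 1, 1, 9), 12000),
    ("Hotel B", datetime(2024, 1, 1, 9), 8000),
    ("Hotel A", datetime(2024, 1, 1, 10), 11500),
    ("Hotel B", datetime(2024, 1, 1, 10), 8000),
    ("Hotel A", datetime(2024, 1, 1, 11), 11500),
]
changes = price_changes(snapshots)
```

Only the 10:00 drop for "Hotel A" is reported here, since every other snapshot repeats the previous price.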

Market positioning analysis project

The client aimed to gather data on all Bosch installers across Germany from a website, using location and radius as input parameters to ensure comprehensive coverage.

Language/Tool: Python, pandas, MySQL, Selenium, SQLAlchemy

Solution:

- Develop a tool using geo-coordinates of German cities and provinces to query Bosch’s installer search, extracting names, addresses, and services.

- The data is compiled and delivered as an Excel file; the tool includes anti-blocking measures and error handling for smooth operation.
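One way to get full coverage from a location-plus-radius search is to lay a grid of query points over the target area and remove duplicates from the overlapping results. The sketch below is an assumption about how that could work, not the project's actual code: the function names, the rough bounding box for Germany, and the 50 km radius are all illustrative. Grid spacing is set equal to the search radius, which is deliberately conservative so neighbouring circles overlap.

```python
import math

EARTH_RADIUS_KM = 6371.0
KM_PER_DEG_LAT = 111.32  # approximate length of one degree of latitude


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def query_centers(south, west, north, east, radius_km):
    """Grid of (lat, lon) query points spaced radius_km apart over the box."""
    lat_step = radius_km / KM_PER_DEG_LAT
    centers = []
    lat = south
    while lat < north + lat_step:
        # Degrees of longitude shrink with latitude, so widen the step.
        lon_step = radius_km / (KM_PER_DEG_LAT * math.cos(math.radians(lat)))
        lon = west
        while lon < east + lon_step:
            centers.append((round(lat, 4), round(lon, 4)))
            lon += lon_step
        lat += lat_step
    return centers


def dedupe(records):
    """Keep one record per (name, address); overlapping queries repeat results."""
    seen = {}
    for rec in records:
        seen.setdefault((rec["name"], rec["address"]), rec)
    return list(seen.values())


# Rough bounding box for Germany (approximate values) and an assumed radius.
centers = query_centers(47.3, 5.9, 55.1, 15.0, 50)
```

Each center would then be submitted to the installer search with the same radius, and `dedupe` applied to the combined results before export.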

Client Feedback

Marcel Claßen

Product Manager